- Published on

Gamified ASD Intervention: Aiding Emotional Development

- Authors

- Name

- Abdul Rafay Zahid

- linkedin/abdul-rafay-zahid

Introduction

Autism Spectrum Disorder (ASD) is a neurodevelopmental condition that affects how people interact and communicate with others. People diagnosed with ASD can have difficulties in different areas. For example, some people may have trouble communicating, while others may have advanced communication skills. Some require more help in their daily lives, while others can live and work with minimal support.

Our proposal, “Aiding Emotional Development in Autistic Children Through Gamified Interventions”, targets children with ASD who find it hard to recognize and express emotions. These children often struggle to show concern for others and to relate to others’ experiences. They also tend to have difficulty interpreting others’ mental states from facial expressions and tone of voice.

The Gamified Approach

This project proposes a gamified approach that harnesses human-computer interaction (HCI) technologies to deliver educational content to children affected by ASD. By integrating HCI-based emotion recognition tools with interactive games, the project aims to create an engaging and effective learning environment. This approach seeks to empower ASD-affected children to improve their emotional understanding and expression, which, in turn, is expected to boost their overall social communication abilities.

Research has been conducted in this field, and our proposal reviews a handful of papers that use serious games to develop specific skills in children with ASD. However, these studies generally lack real-life testing. We aim not only to create a serious game designed to improve emotional skills but also to test it with children with ASD to observe patterns and improvements. We intend to record these results and build on them to refine our project. Testing matters because autism is an extremely variable disorder: the word ‘spectrum’ reflects how differently autism is experienced by different people. To cater to most, if not all, children with varying levels of autism, we consider testing crucial.

Technical Architecture

We aim to support the emotional development of children with ASD. For this to succeed, their emotional expression must be evaluated accurately. To this end, our application’s computer vision pipeline starts with image processing of the live video feed and ends with a machine learning model labeling the emotional expression. Feature extraction and region-of-interest segmentation will be carried out with a simple wrapper around the OpenCV library, a widely used standard for image processing applications.
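The region-of-interest step of this pipeline can be sketched roughly as follows. This is a minimal illustration, not the project's actual wrapper: it assumes OpenCV's Python bindings, a Haar cascade face detector, and a 48x48 grayscale crop, none of which are specified above. The helper names `largest_box`, `pad_box`, and `extract_face` are hypothetical.

```python
from typing import Iterable, Optional, Tuple

Box = Tuple[int, int, int, int]  # x, y, width, height


def largest_box(boxes: Iterable) -> Optional[Box]:
    """Pick the detection with the largest area (assumed to be the player)."""
    candidates = [tuple(int(v) for v in b) for b in boxes]
    if not candidates:
        return None
    return max(candidates, key=lambda b: b[2] * b[3])


def pad_box(box: Box, frame_w: int, frame_h: int, margin: float = 0.1) -> Box:
    """Grow the box by a relative margin, clamped to the frame borders."""
    x, y, w, h = box
    dx, dy = int(w * margin), int(h * margin)
    x0, y0 = max(0, x - dx), max(0, y - dy)
    x1, y1 = min(frame_w, x + w + dx), min(frame_h, y + h + dy)
    return (x0, y0, x1 - x0, y1 - y0)


def extract_face(frame, size: Tuple[int, int] = (48, 48)):
    """Return a resized grayscale face crop from a BGR frame, or None."""
    import cv2  # imported lazily so the helpers above work without OpenCV

    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    # Assumption: the Haar cascade bundled with OpenCV is used for detection.
    detector = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_frontalface_default.xml"
    )
    boxes = detector.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5)
    box = largest_box(boxes)
    if box is None:
        return None
    x, y, w, h = pad_box(box, gray.shape[1], gray.shape[0])
    return cv2.resize(gray[y:y + h, x:x + w], size)
```

The crop returned by `extract_face` would then be passed to the emotion classifier. Choosing the largest face is a simple heuristic for a single-player setting and may need revisiting if several people are in view.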

Data Cleaning and Fine-Tuning

Data Cleaning:

- Objective: Ensure the dataset’s quality and consistency for effective model training.

- Processes:

  - Quality Control: Remove images with poor lighting, blur, or other quality issues.

  - Label Consistency: Address any discrepancies or biases in emotion labels.

  - Standardization: Standardize image sizes and formats for consistency.
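The cleaning steps above can be sketched in a few lines. This is only an illustration under stated assumptions: images are represented as flat lists of 0-255 grayscale values, the brightness thresholds `low` and `high` are hypothetical, and the label set is the seven emotions of the dataset described later in this post.

```python
from statistics import mean
from typing import Iterable, List, Optional, Sequence, Tuple

EMOTIONS = {"surprise", "fear", "angry", "neutral", "sad", "disgust", "happy"}


def normalize_label(raw: str) -> Optional[str]:
    """Lower-case and trim a label; return None if it is not a known emotion."""
    label = raw.strip().lower()
    return label if label in EMOTIONS else None


def is_well_lit(pixels: Sequence[int], low: int = 40, high: int = 215) -> bool:
    """Reject images whose mean brightness is too dark or too washed out.

    The thresholds are illustrative assumptions, not tuned values.
    """
    return low <= mean(pixels) <= high


def clean(samples: Iterable[Tuple[Sequence[int], str]]) -> List[Tuple[Sequence[int], str]]:
    """Keep only well-lit samples with a recognized, normalized label."""
    kept = []
    for pixels, raw_label in samples:
        label = normalize_label(raw_label)
        if label is not None and is_well_lit(pixels):
            kept.append((pixels, label))
    return kept
```

A blur check and resizing to a common image size would slot into the same loop; they are left out here to keep the sketch dependency-free.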

Fine-Tuning:

- Objective: Adapt a pre-trained model to the specific emotion recognition task.

- Processes:

  - Transfer Learning: Utilize the knowledge learned by the pre-trained model on a different task.

  - Task-Specific Training: Fine-tune the model on the emotion recognition task using the cleaned and augmented dataset.
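One common way to set up this kind of transfer learning is to freeze a pre-trained backbone and replace its final layer. The sketch below assumes PyTorch and torchvision, which this post does not specify, and a ResNet-50 backbone pre-trained on ImageNet. The function names are hypothetical.

```python
NUM_EMOTIONS = 7  # the seven emotion labels in the dataset described in this post


def build_finetune_model(num_classes: int = NUM_EMOTIONS, freeze_backbone: bool = True):
    """Return a ResNet-50 whose final layer is replaced for emotion classes."""
    # Imported lazily so this file can be loaded without PyTorch installed.
    from torch import nn
    from torchvision import models

    # Assumption: ImageNet weights are the starting point for transfer learning.
    model = models.resnet50(weights=models.ResNet50_Weights.DEFAULT)
    if freeze_backbone:
        for param in model.parameters():
            param.requires_grad = False  # keep the pre-trained features fixed
    # The new head is created after freezing, so it stays trainable.
    model.fc = nn.Linear(model.fc.in_features, num_classes)
    return model


def trainable_parameters(model):
    """Collect the parameters an optimizer should update."""
    return [p for p in model.parameters() if p.requires_grad]
```

With the backbone frozen, only the new classification head is trained at first. Unfreezing the last few layers afterwards is a typical second stage, at the cost of more compute and a higher risk of overfitting on a small dataset.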

Model Deployment:

- Input: Trained and evaluated model.

- Process: Deploy the model to a production environment or integrate it into the target system (Unity game). Implement real-time or batch processing capabilities.

- Output: Deployed model ready for inference.

Lesson Providing Module

The user-facing application is divided into three modules: “Emotion Expression,” “Emotion Recognition,” and “Emotion Rejection.” A root module connects them and is responsible for serving lessons to the game. It is a singleton class whose single instance stays active throughout the run-time of the application and acts as a scheduler for lessons.

Depending on the level selected, a series of scenarios is served so that the Unity application can display them to the user. Which lesson to serve is decided algorithmically based on the child's progress. The scheduler is updated between scenarios, since failed scenarios may enter the list again. The modules are connected through a central controller that manages the flow of data between them, processes the data they collect, and provides feedback to the child. We also use a cloud-based database to store user data and to introduce new or updated lessons to the scheduler.

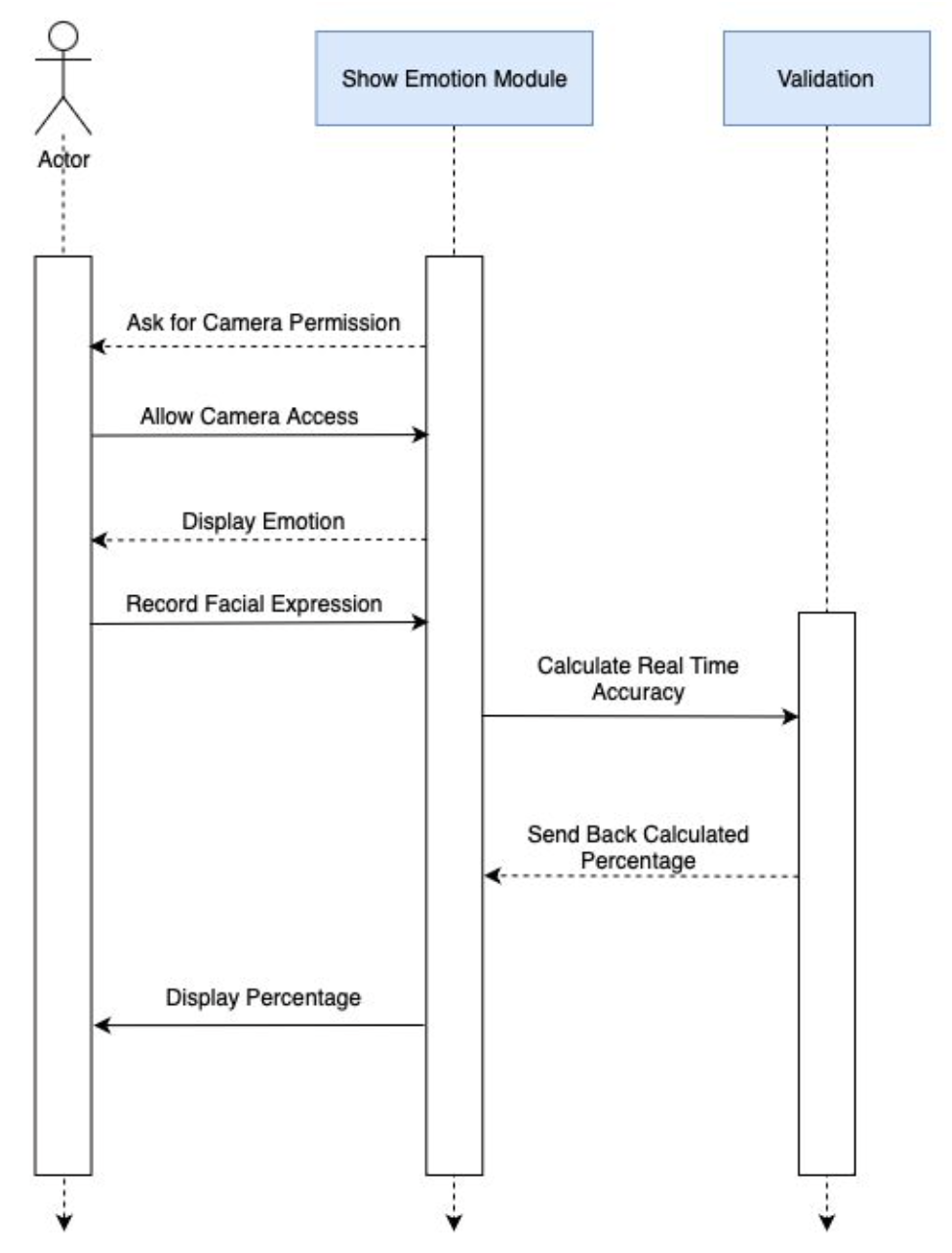
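The scheduling behaviour described above, a single long-lived instance that serves scenarios and re-queues failed ones, might look roughly like this. It is a language-neutral sketch written in Python rather than the Unity implementation, and the class and method names are hypothetical. The real selection logic based on the child's progress is likely more involved than a simple queue.

```python
from collections import deque


class LessonScheduler:
    """Singleton that hands out scenarios and re-queues the ones that failed."""

    _instance = None

    def __new__(cls):
        # Create the shared instance once and reuse it on every later call.
        if cls._instance is None:
            cls._instance = super().__new__(cls)
            cls._instance._queue = deque()
        return cls._instance

    def load_level(self, scenarios):
        """Replace the pending scenarios with those of the selected level."""
        self._queue = deque(scenarios)

    def next_scenario(self):
        """Return the next scenario to display, or None when the level is done."""
        return self._queue.popleft() if self._queue else None

    def report(self, scenario, passed):
        """Record the outcome; a failed scenario goes to the back of the queue."""
        if not passed:
            self._queue.append(scenario)
```

Usage would follow the loop in the text: load a level, request a scenario, display it, then call `report` with the outcome before requesting the next one.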

Show Emotion Module

The Show Emotion Module assesses emotion expression in real time using camera access in Unity. The user is presented with an emotion prompt, and a machine learning module evaluates their expression as they mimic the displayed emotion. For the machine learning component, we propose incorporating established models such as OpenPose for pose detection and EmoReact for emotion recognition. These models can be pre-trained on large datasets such as AffectNet or CK+ to support robust performance.

Users receive immediate feedback through a percentage display that indicates how closely their expression matches the prompt. A color-coded theme makes the result easy to read:

- Green: Success (above 80%)

- Orange: Room for improvement (50% - 80%)

- Red: Incorrect (below 50%)

This visual cueing system not only reinforces positive outcomes but also encourages users to revisit previous modules for further enhancement, fostering a continuous learning loop. Throughout development, we prioritize rigorous testing and user feedback to refine the system’s accuracy and user interface.
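The color bands listed above map directly onto a small function. The sketch below assumes the percentage is the model's probability for the prompted emotion scaled to 0-100, which is one plausible reading and not stated in the text. The function names are hypothetical.

```python
def expression_score(probabilities: dict, target: str) -> float:
    """Turn the model's probability for the prompted emotion into a percentage.

    Assumption: `probabilities` maps emotion names to values in [0, 1].
    """
    return 100.0 * probabilities.get(target, 0.0)


def feedback_color(score_percent: float) -> str:
    """Map a percentage to the feedback color shown to the user."""
    if not 0.0 <= score_percent <= 100.0:
        raise ValueError("score must be between 0 and 100")
    if score_percent > 80.0:
        return "green"   # success: above 80%
    if score_percent >= 50.0:
        return "orange"  # room for improvement: 50% to 80%
    return "red"         # incorrect: below 50%
```

Note that a score of exactly 80% falls in the orange band here, following the "above 80%" wording for green.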

Model Implementation

Several models are candidates for emotion recognition, each with its own strengths and trade-offs.

Dataset

The image dataset contains 28,821 training images and 7,066 testing images. Each image is labelled with one of 7 emotions: 'surprise', 'fear', 'angry', 'neutral', 'sad', 'disgust', and 'happy'. Its standardized format and variety of expressions make it a valuable resource for facial expression recognition.

Models Considered

1. VGGFace

VGGFace is a face recognition model that can be adapted for emotion recognition tasks. It is well known for its high accuracy in recognizing facial features. Although the model is not specifically trained on children’s datasets, it can handle tasks involving multiple emotions, and fine-tuning it for children is straightforward. Its large architecture makes it suitable for capturing intricate facial expressions. If Unity integration is required, a custom implementation based on Unity’s ML-Agents or another compatible framework must be used.

2. FaceNet

FaceNet was designed for face recognition and can be adapted for emotion recognition by fine-tuning its last layers. It achieves high accuracy with a moderately large model size. Like VGGFace, it lacks specific training on children’s datasets, and a custom implementation is needed for Unity integration. The number of emotions it can recognize depends on the fine-tuning, which makes it versatile across tasks.

- Accuracy: 0.55

- ROC: 0.9

3. ResNet

ResNet (specifically ResNet-50 or ResNet-101) is also a popular choice for emotion recognition. Like FaceNet, it can be fine-tuned for a specific emotion recognition task. It has a large model size and high accuracy, and it also requires a custom implementation for Unity integration. While not specifically trained on children’s datasets, it is easily adapted and therefore suitable for our purpose.

- Accuracy: 0.64

- ROC: 0.95

Model Comparison & Selection

Based on the metrics above, ResNet outperforms both VGGFace and FaceNet in accuracy and ROC score, which indicates that it identifies the correct emotion more reliably. FaceNet performs better than VGGFace but still lags behind ResNet. We therefore choose ResNet for the emotion expression module.